Hi, I'm Ziwei.

I am a fourth-year Ph.D. student in Computer Science at Harvard University, advised by Dr. Elena Glassman.

Currently, my research focuses on augmenting human cognition and efficiency with large language models (LLMs) and interactive techniques. The aim is to ensure that potential AI errors can be easily noticed, judged, and recovered from, a concept I formalize as AI-resiliency.

Before coming to Harvard, I graduated from Cornell University with a bachelor's in Mathematics and Computer Science (December 2020) and a master's in Computer Science (May 2021).

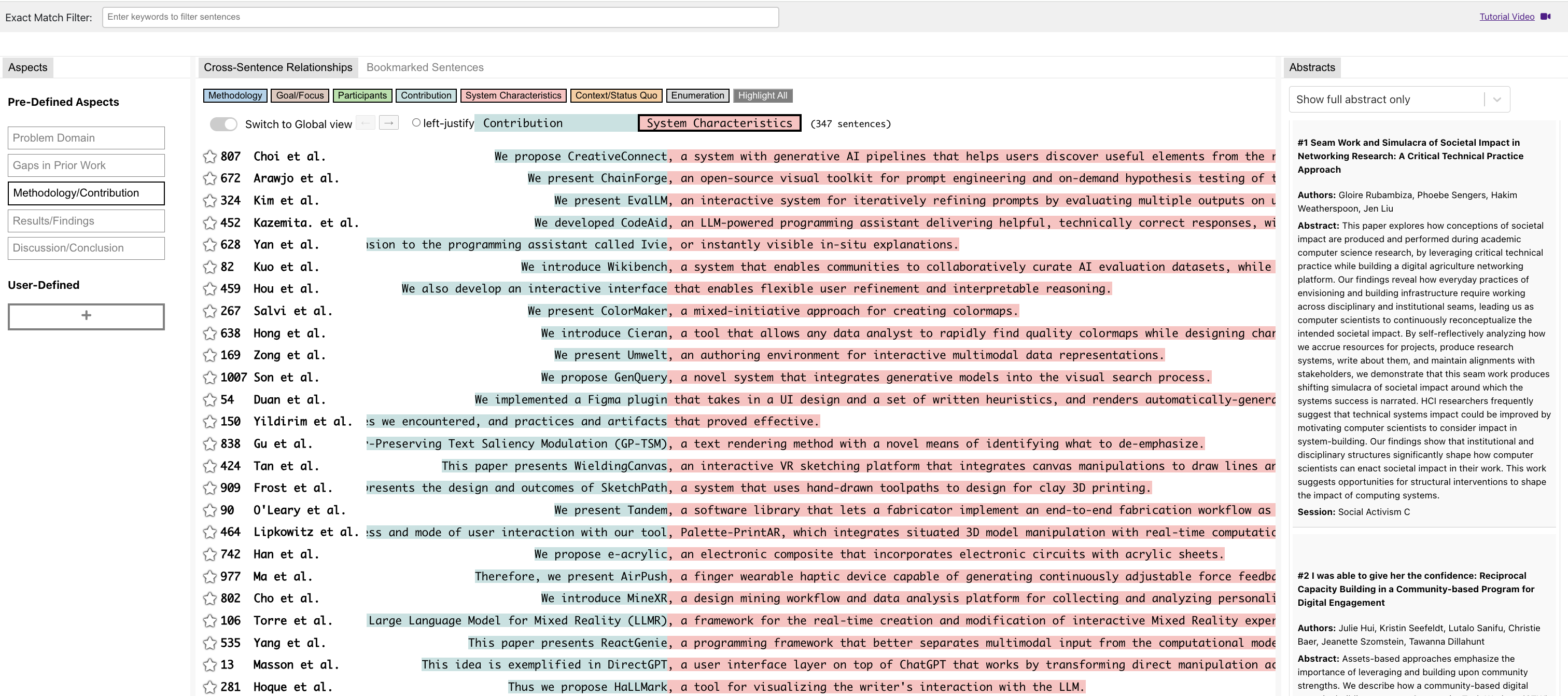

AbstractExplorer: Leveraging Structure-Mapping Theory to Enhance Comparative Close Reading at Scale

Ziwei Gu, Joyce Zhou, Ning-Er (Nina) Lei, Jonathan K. Kummerfeld, Mahmood Jasim, Narges Mahyar, Elena L. Glassman

UIST 2025

paper / video

Reading documents is too cognitively demanding to do at scale. But what if the documents share a common structure? We design a system that enables comparative close reading of similarly structured documents at scale.

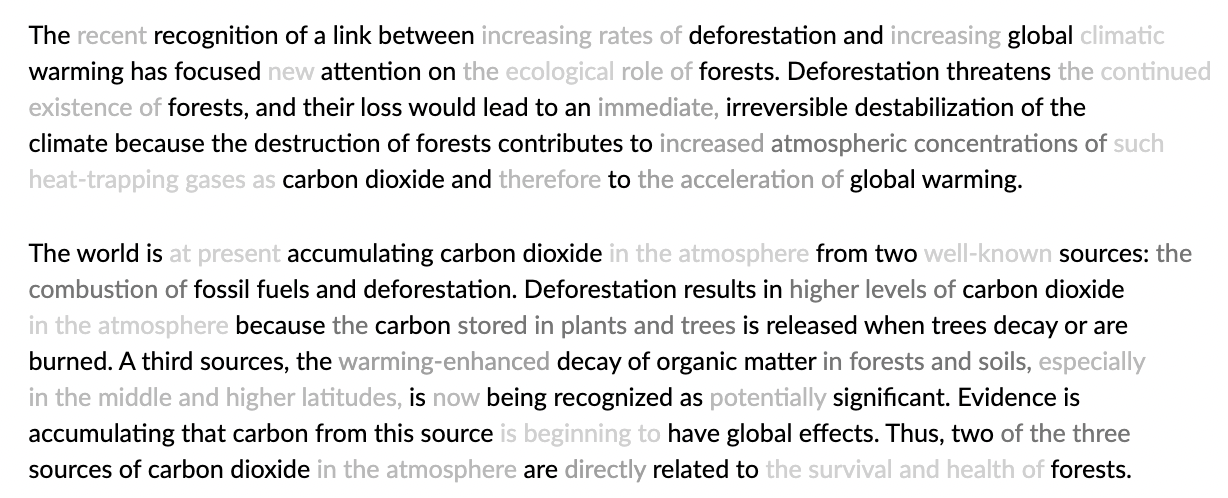

Why Do Skimmers Perform Better with Grammar-Preserving Text Saliency Modulation (GP-TSM)? Evidence from an Eye Tracking Study

Ziwei Gu, Owen Raymond, Naser Al Madi, Elena L. Glassman

CHI 2024 Late Breaking Work

paper / video

Through an eye-tracking study, we uncover unique gaze patterns when people read with GP-TSM, an LLM-powered reading support tool, and explain how it shapes the reading experience.

An AI-Resilient Text Rendering Technique for Reading and Skimming Documents

Ziwei Gu, Ian Arawjo, Kenneth Li, Jonathan K. Kummerfeld, Elena L. Glassman

CHI 2024

paper / video

Reading is challenging. AI summaries can be helpful but also unreliable. We design an LLM-powered technique that enhances reading efficiency while making readers resilient to errors in summaries.

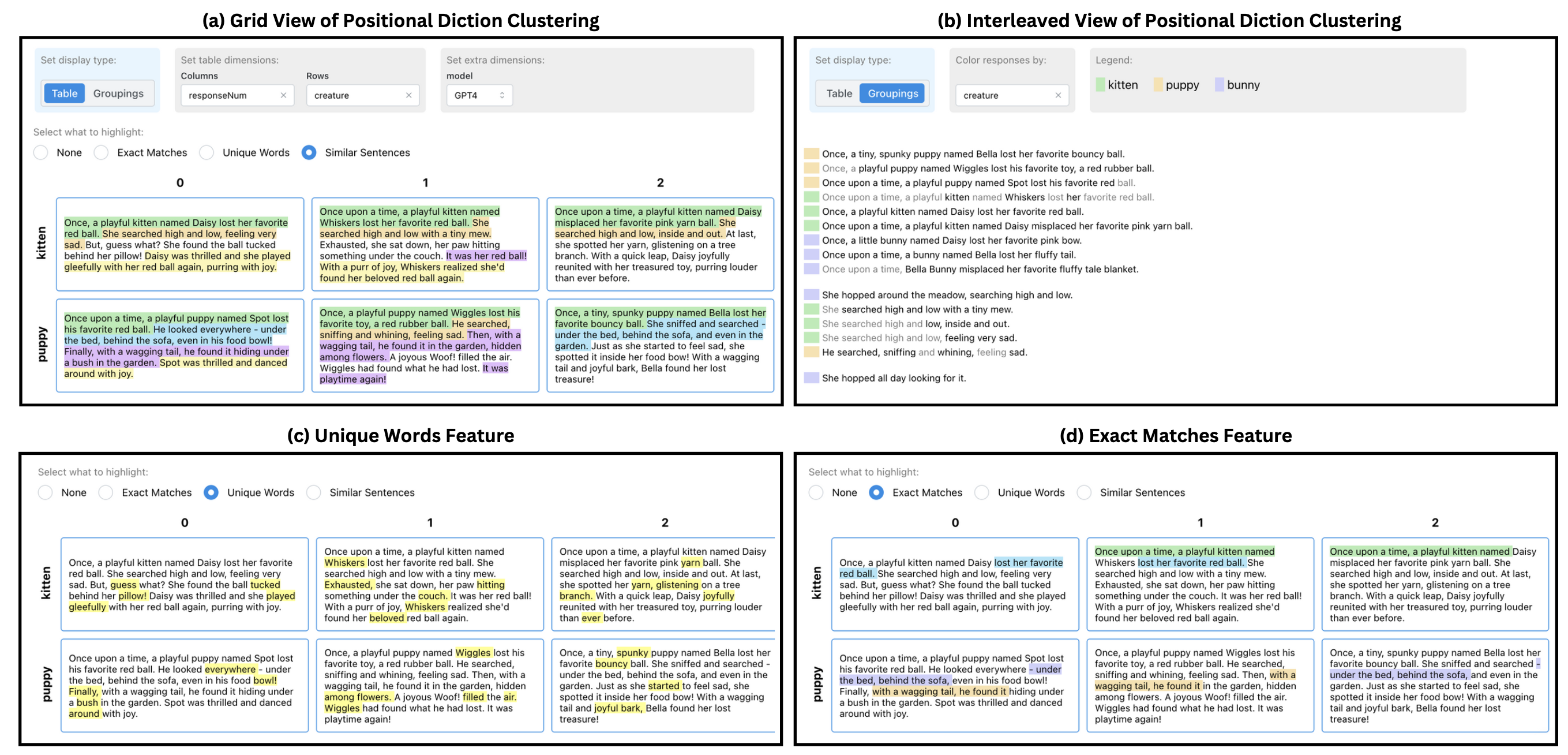

Supporting Sensemaking of Large Language Model Outputs at Scale

Katy Ilonka Gero, Chelse Swoopes, Ziwei Gu, Jonathan K. Kummerfeld, Elena L. Glassman

CHI 2024

Honorable Mention Award

paper

Large language models (LLMs) are capable of generating multiple responses to a single prompt. In this paper, we explore how to present many LLM responses at once to support sensemaking at scale.

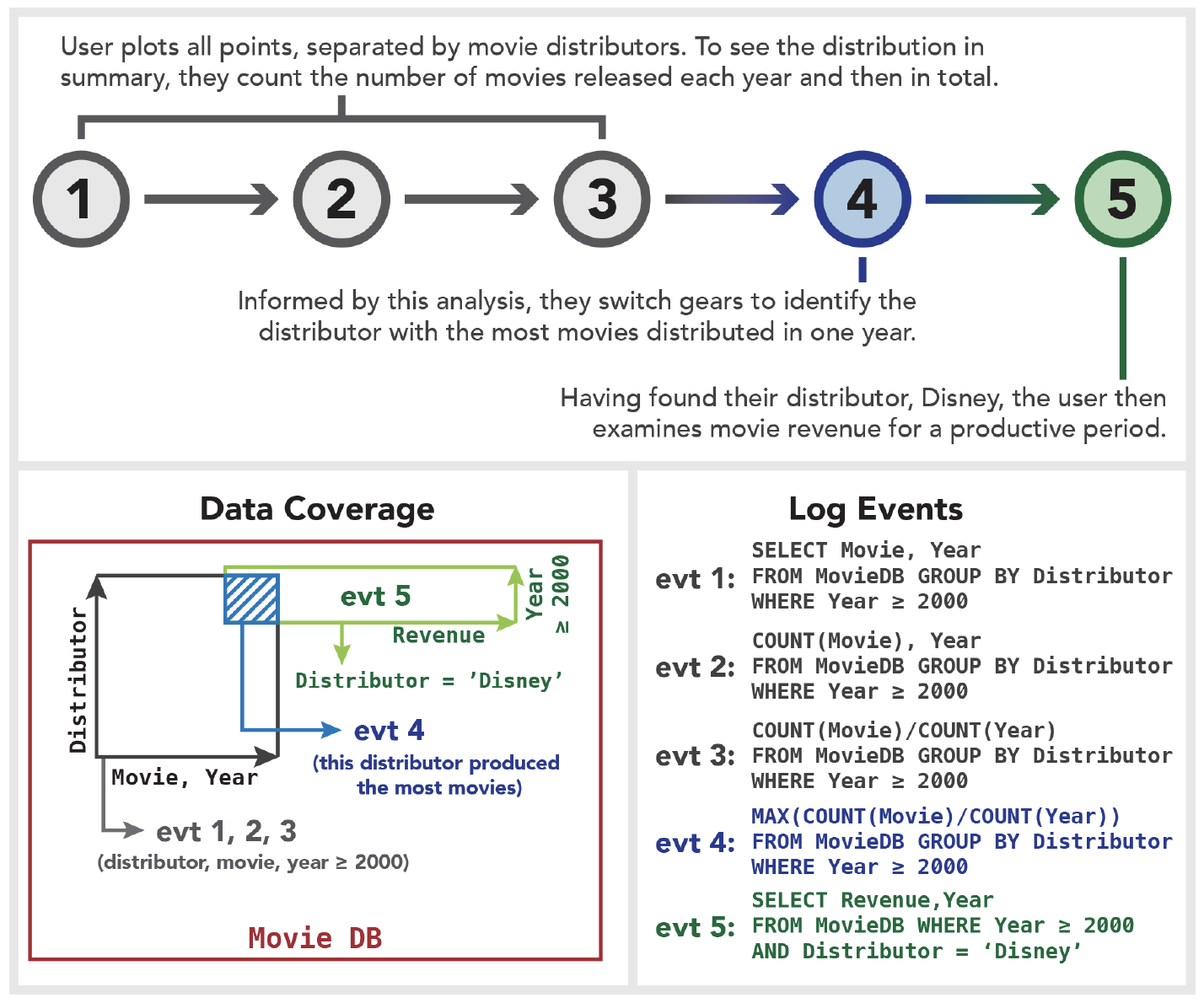

Tessera: Discretizing Data Analysis Workflows on a Task Level

Jing Nathan Yan, Ziwei Gu, Jeffrey M Rzeszotarski

CHI 2021

paper / video / slides

Interaction logs are useful but can be extremely complex. We break down event logs into goal-directed segments to make user workflows easier to understand.

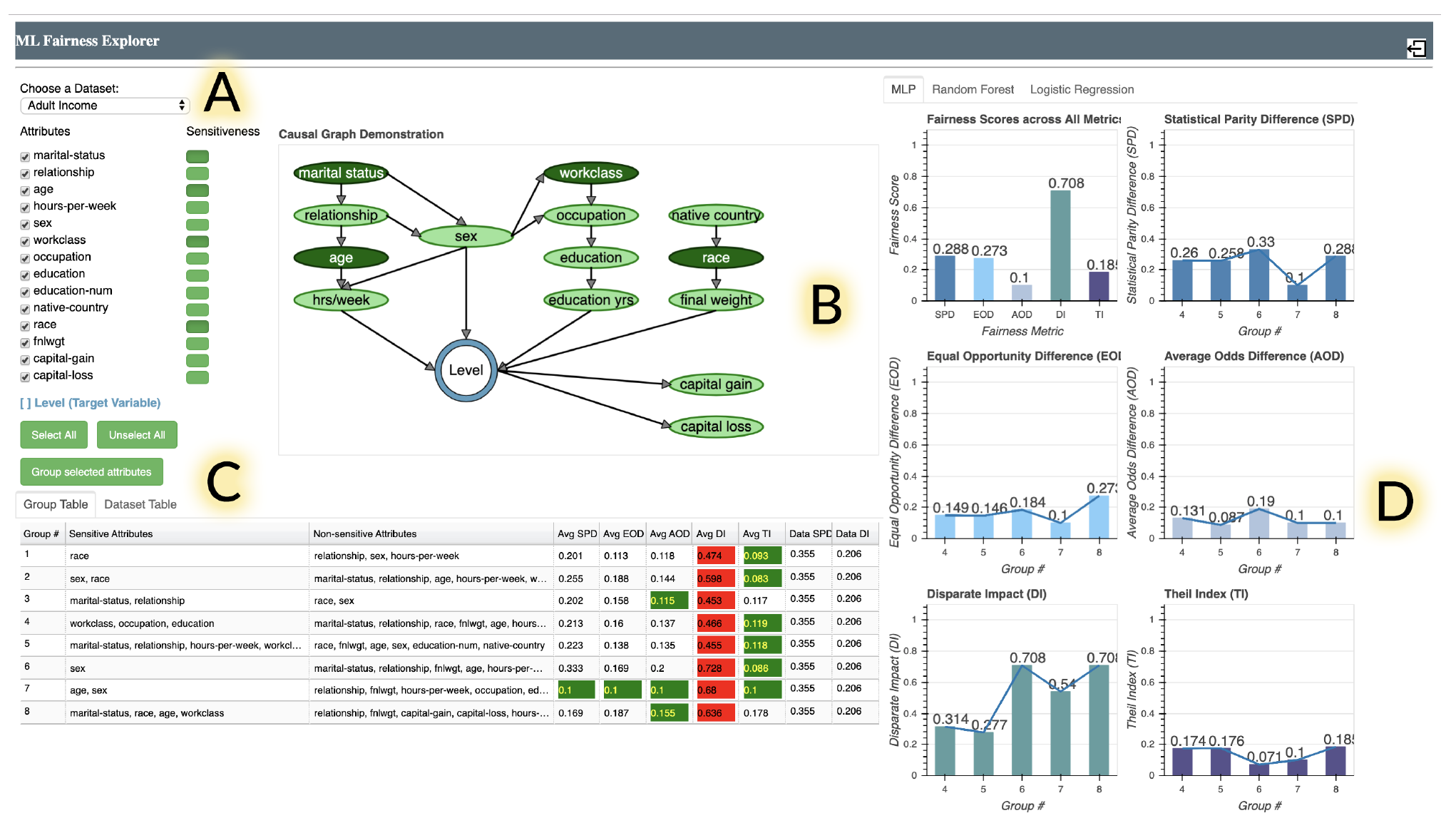

Understanding User Sensemaking in Machine Learning Fairness Assessment Systems

Ziwei Gu, Jing Nathan Yan, Jeffrey M Rzeszotarski

WWW 2021

paper / video / slides

How do core design elements of debiasing systems shape how people reason about biases? We find distinctive sensemaking patterns for different systems through a think-aloud study.

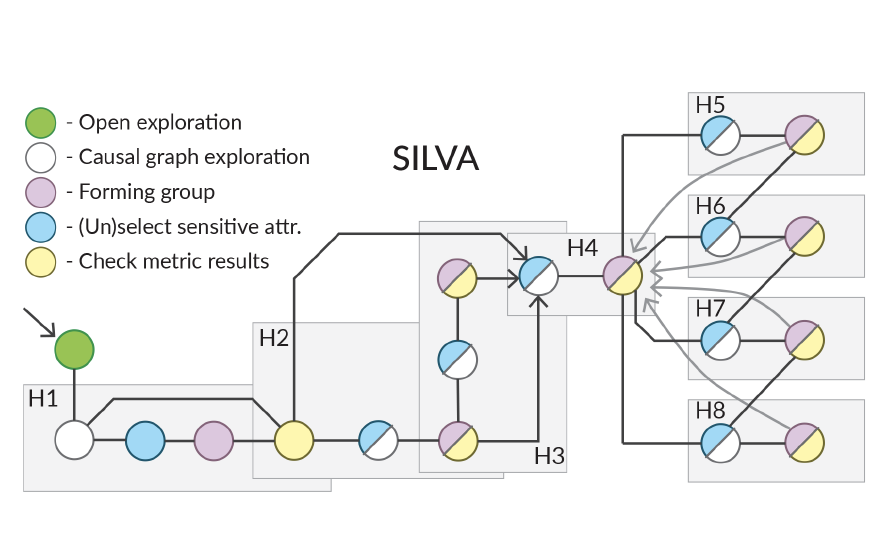

Silva: Interactively Assessing Machine Learning Fairness Using Causality

Jing Nathan Yan, Ziwei Gu, Hubert Lin, Jeffrey M Rzeszotarski

CHI 2020

paper / short video / long video / slides

We present Silva, an interactive tool that utilizes a causal graph linked with quantitative metrics to help people find and reason about sources of biases in datasets and machine learning models.
